The 5 Eras of AI Convergence

Consumer electronics have been converging for the past few decades. The MP3 Player, alarm clock, digital camera, calculator, maps, watch, GPS, and compass are now all on your smartphone. A single device replaced many separate technologies.

Artificial Intelligence has been converging towards generality, and so has the way we program computers. Early computers were programmed by physically changing their wiring, so every new program required manual intervention. The von Neumann architecture stored programs in memory as code, eliminating the need for physical modifications. The current trend is towards natural language programming, where users simply give commands to the computer in human language.

As AI has advanced, researchers have been working towards more general and flexible systems that can perform a wide range of tasks, with AGI as the ultimate goal. This progression can be divided into five eras, each characterized by different approaches and techniques for achieving greater generality in AI.

First Era: Many Algorithms

In the first era, each area of AI was dominated by its own family of algorithms and techniques, such as Decision Trees, SVMs, and many others. Knowing how to handcraft features was essential to reach the state of the art in any meaningful task, which meant a lot of specialization was necessary. The algorithms and techniques used to classify the sentiment of a text were completely different from those needed to recognize a face. It was difficult to transfer your knowledge from working with Natural Language to working with images or audio. Because of this, the machine-learning world was split into silos. In short, the first era of AI was characterized by narrow, task-specific algorithms and techniques that were often hand-engineered by experts in the field.
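To make "handcrafted features" concrete, here is a minimal sketch for a toy sentiment task. The word lists, the feature names, and the decision rule are all hypothetical, chosen by hand for illustration; they are not taken from any real system, and a real first-era pipeline would feed such features into a trained classifier like an SVM.

```python
import re

# Hypothetical hand-picked vocabularies; an expert would curate these per task.
POSITIVE_WORDS = {"good", "great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful"}


def extract_features(text: str) -> dict:
    """Turn raw text into a few hand-designed numeric features."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return {
        "positive_count": sum(t in POSITIVE_WORDS for t in tokens),
        "negative_count": sum(t in NEGATIVE_WORDS for t in tokens),
    }


def classify_sentiment(text: str) -> str:
    """A hand-written rule over the features, standing in for a trained model."""
    features = extract_features(text)
    score = features["positive_count"] - features["negative_count"]
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

None of this carries over to recognizing a face: the features, and usually the algorithm on top of them, would have to be designed again from scratch.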

Second Era: Same Algorithm, Many Architectures

Neural Networks started to achieve state-of-the-art results in many different tasks in the 2010s. This revolution started when Deep Learning architectures began to dominate the ImageNet competition. In 2010, the state of the art in Image Classification had an error rate of more than 25%; by 2015, it had dropped below 5%. The success of Deep Neural Networks led to a new era of Machine Learning applications. Many companies like Google, Tesla, and Facebook started to apply Deep Learning to their existing products and also to build entirely new categories like self-driving cars.

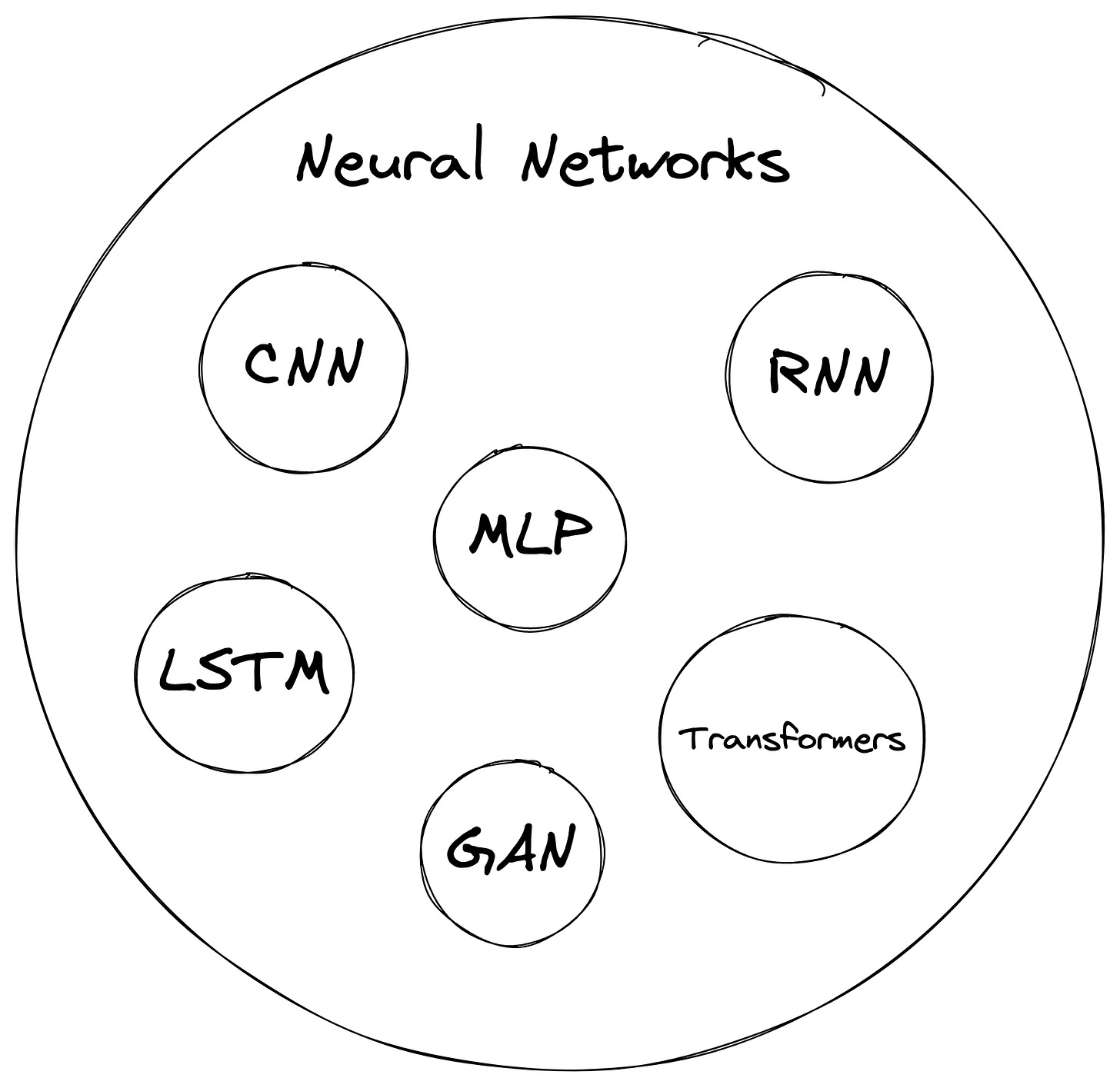

After dominating image-related tasks, Deep Learning started to eat into all categories of Machine Learning. There are many flavors, or "architectures," of Neural Networks. In this era, it was common to use one architecture, like CNNs (Convolutional Neural Networks), for images and another, like RNNs (Recurrent Neural Networks), for text.

Third Era: Same Algorithm, Same Architecture, and Many Models

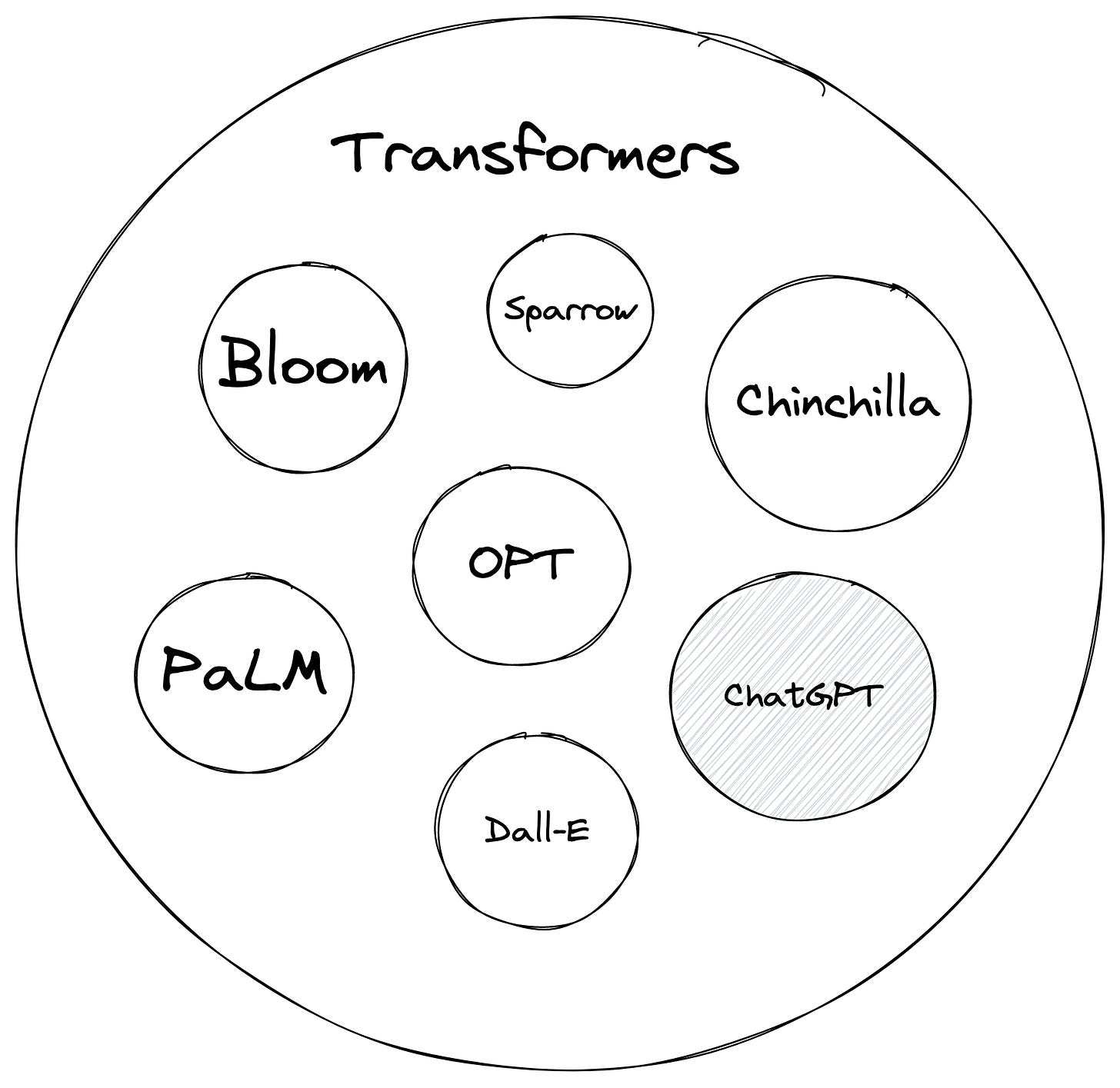

Google's 2017 paper, "Attention Is All You Need," introduced a new Neural Network architecture called the Transformer and pushed it to the top of the architecture conversation. It was not clear back then that it would start a revolution in AI. The Transformer architecture first devoured NLP (Natural Language Processing) and has now spread to all areas of Machine Learning. There are vision transformers, audio transformers, action transformers, and more. A single architecture has become the go-to choice.

Transformers have led to an explosion in new capabilities for AI systems. OpenAI created the increasingly powerful series of GPT models, culminating in ChatGPT, one of the fastest-growing tech products in history.

Fourth Era: Same Algorithm, Same Architecture, Same Model

We are now entering a new phase of AI convergence. The fourth era started with the surprising generality of Large Language Models (LLMs). These models are trained on vast amounts of data with the simple goal of predicting the next word in a sequence. Surprisingly, the same model can generalize to basically any language task. The same LLM is capable of classifying the sentiment of a tweet, answering questions, writing poems, and many other things. What is most surprising is not only this generality but also the fact that the model was not explicitly trained to do those tasks. The paper "Language Models are Few-Shot Learners," released by OpenAI in 2020, kickstarted the fourth era. We will see new models capable of combining language, vision, and audio in the next few years.
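The idea that one model handles many tasks without task-specific training can be sketched as few-shot prompting: the task is described in the input text itself, along with a handful of examples. The snippet below is only an illustration under stated assumptions. `build_few_shot_prompt` and the "Input:/Output:" layout are hypothetical names and conventions chosen here, and the call to an actual LLM is deliberately left out.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a text prompt from an instruction, worked examples, and a new query.

    The "Input:/Output:" layout is an assumed convention, not a required format.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model would be asked to continue from here
    return "\n".join(lines)


# Two unrelated tasks, expressed purely as text for the same (unspecified) model.
sentiment_prompt = build_few_shot_prompt(
    "Classify the sentiment of each tweet as positive or negative.",
    [("I love this phone", "positive"), ("Worst service ever", "negative")],
    "The concert was amazing",
)

translation_prompt = build_few_shot_prompt(
    "Translate each English word to French.",
    [("cat", "chat"), ("house", "maison")],
    "dog",
)
```

Only the text changes between the two tasks; the model and its weights would stay the same, which is the sense in which this era converges on a single model.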

DeepMind released the paper "A Generalist Agent" in 2022. It introduced a new model called Gato, capable of doing multiple tasks in different domains. As the authors put it in the paper:

Inspired by progress in large-scale language modeling, we apply a similar approach toward building a single generalist agent beyond the realm of text outputs. The same network with the same weights can play Atari, caption images, chat, stack blocks with a real robot arm, and much more, deciding based on its context whether to output text, joint torques, button presses, or other tokens.

Gato is not state-of-the-art in the tasks it can do. However, we can imagine that new models will be developed and will soon achieve state-of-the-art results in many tasks. Generalist models will slowly gain momentum until we have general foundation models that can perform any task at the state of the art.

Final Era: The Road to AGI

An Artificial General Intelligence (AGI) is a system capable of understanding and learning any intellectual task a human can. AGI has been in the domain of Science Fiction for a long time. For most of my career, AI research focused on "Narrow AI," that is, building AI systems for specific tasks. Even ten years ago, no one would have taken you seriously if you said you aimed to build AGI.

More and more people believe that AGI is an attainable goal. The final era of AI convergence will bring us AGI and start a new era, this time not only for AI but for humanity.